While AI services like ChatGPT, Gemini and others can be a useful tool for getting tech help online, they sometimes give wrong answers. But they still have some advantages over human help.

Video Summary

In This Tutorial

Learn the strengths and weaknesses of using AI for tech support. I’ll show common mistakes AI makes, why it happens, and how to use it effectively without getting misled.

Sometimes You Get Back Incorrect Solutions (00:37)

- AI often gives plausible but wrong solutions, such as overlooking a simple setting in System Settings.

- It may offer workarounds instead of the correct method, even after follow-up prompts.

Sometimes You Get Out-Of-Date Answers (02:13)

- AI relies on existing online information, so it can give advice for older macOS or iOS versions.

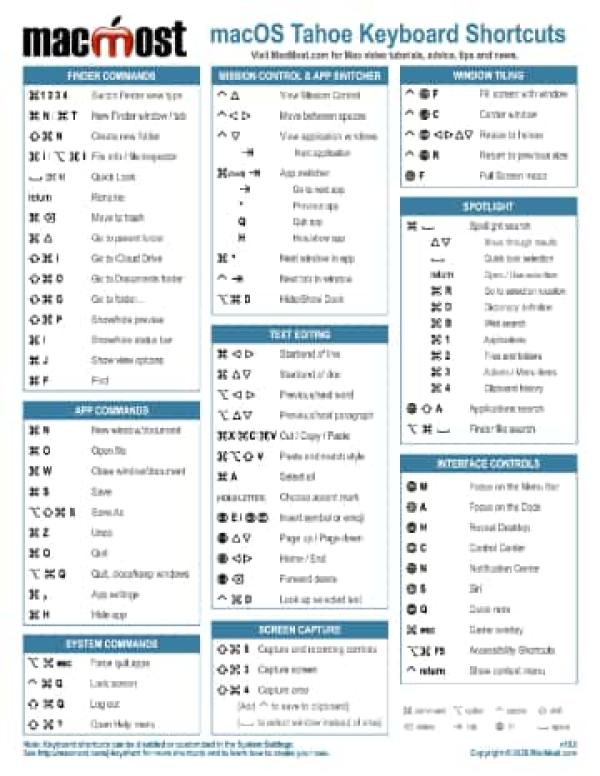

- New features, like multiple Control Centers in macOS Tahoe, may be completely missed.

Sometimes AI Makes Things Up (03:15)

- Large language models can “hallucinate,” inventing commands, menu items, or features that don’t exist.

- Examples: inventing a Terminal setting like `FXEnableSnapToGrid`, or claiming certain apps have live Dock icons when they do not.

AI Doesn't Like To Just Say There Is No Solution (04:41)

- When the correct answer is “you can’t do that,” AI often invents a feature instead.

- Example: Suggesting a nonexistent “View > Note Body Width” menu item in Notes when asked how to make a table narrower.

AI Likes To Agree With You, Even If You Are Wrong (05:41)

- If you ask a question assuming a false statement, AI usually accepts it instead of correcting you.

- Example: When told you can’t put the time in the Dock, AI suggests alternatives but never mentions that the Clock app’s Dock icon already shows the time.

These Are Also Problems With Human Tech Help (07:14)

- Humans also give wrong answers, make things up, avoid saying “no,” and agree with false assumptions.

- These are long-standing issues in forums and personal tech help.

AI Tech Help Advantages (07:38)

- AI responds instantly, unlike forums that may take hours or days.

- Even wrong answers waste only minutes instead of days.

- AI is usually polite, and its accuracy will likely improve over time as models are updated.

Summary

AI tech help is fast and convenient but can give wrong, outdated, or completely invented answers. It rarely admits when no solution exists and often agrees with incorrect assumptions. These flaws also exist in human tech help, but AI is quicker and friendlier. Use AI as a starting point, but verify solutions and stay aware of its limitations.

Video Transcript

Hi, this is Gary with MacMost.com. Let's talk about using AI for tech help.

So there are a lot of different ways to get tech help. Besides official sources, like going through Apple Support, there are a lot of online forums, you can ask friends and family, and now you can also use AI. If you ask large language model AI like ChatGPT or Google Gemini tech questions, you get answers right away, and often they are right. But sometimes not! So it is important to understand how AI can still get things wrong, at least for now.

I think most of the time when you ask ChatGPT how to do something on, say, your Mac, you'll get a good answer. But not all the time, and it is important to realize that. For instance, here's an example where I ask ChatGPT how to get rid of the margin around windows when you do things like, say, fill the screen. The correct answer is that there is a setting for that in System Settings under Desktop & Dock. But ChatGPT didn't seem to know about that setting. It suggests other workarounds, but not simply going to that setting and switching it.

Now here's another example where ChatGPT seemed to know about the subject being asked but didn't know the right solution. This is about adding things like Duration and Dimensions as columns in List View in the Finder on the Mac. It seemed to know that you could do that, but it didn't know how. Here it suggests simply having a folder filled with only videos or only audio files to be able to get this. But the solution is in fact to rename the folder to something like Movies or Music to get Duration, or Pictures to get Dimensions. ChatGPT just didn't seem to know that. Even when prompted further it still didn't know the correct answer, even though there are plenty of online sources that talk about this, including my own videos. As a matter of fact, it goes and invents an entire idea of a Movies or Music template or something like that. It is really much simpler than that and pretty easy once you know how. But somebody trying to do this just using ChatGPT to guide them won't get there.

Now remember, all these large language model AIs are based on information that's out there: things that people have written, videos that people have made, things like that. So AI is just drawing on a body of knowledge that's already there, and sometimes that body of knowledge is old. You get the same thing when you search the web for a solution and maybe get a post from three years ago that doesn't know about something new. AI falls into that same trap, sometimes taking the old information and not looking for anything newer.

For instance, here I'm asking about adding more things to Control Center. A great new feature in macOS Tahoe is the ability to have multiple Control Centers. But there is so much information from before Tahoe, when you couldn't do that, that the answer you get from ChatGPT is inadequate. It doesn't mention that you can simply add another Control Center. This happens all the time: AI gives you advice about older versions of macOS or iOS, or, if you're a programmer, older versions of Swift or PHP or whatever it is you're using.

Now probably the biggest problem is that sometimes large language model AI will make stuff up! People call this hallucinating, and you can see it all the time in tech questions. For instance, here I'm asking for some Terminal commands, things I can use in the Terminal to, say, change how the Desktop looks. There are such commands, and when asked this ChatGPT did actually return some useful things. But if you scroll through here you quickly come to something that's just made up. It doesn't actually exist. You search for this FXEnableSnapToGrid and it's not there. Nobody talks about it online. Somehow ChatGPT decided that it would be neat if something like this existed, or that maybe it does exist and this might be what it's called, and just presented it as fact. If you tried it, you'd be really confused to see that it doesn't work at all.

Now I don't want to pick only on ChatGPT, so I tried some others as well. Here's Claude. I've asked Claude about icons in the Dock: notice that the Calendar icon shows the current date. What else can do that? It gives some correct answers, like Activity Monitor. But then things like FaceTime and Time Machine don't actually do anything special with their icons in the Dock, yet it throws them in the list anyway.

Now large language model AIs don't like it when there is simply no answer. When you ask a question, you'll almost never get "Nope, you can't do that," and that's it, which is what someone like me will tell you. So for instance, here I'm asking a common question: how do you get a table in the Notes app to be narrower? It seems that when you have a lot of columns, the table just fills the note from left to right. Of course, the answer is that you can't do anything about it. There are no controls for that. But when I asked Google Gemini about it, it made up an entire menu item, View > Note Body Width, that supposedly lets you set it from standard to full width. That doesn't exist. It's not there. Gemini doesn't like the fact that the answer is simply that you can't do it, so it makes something up.

If you find these videos valuable, consider joining the more than 2,000 others who support MacMost through Patreon. You get exclusive content, course discounts, and more. You can read about it at macmost.com/patreon.

One last problem I want to talk about is that AI likes to agree with you, sometimes to the point of giving wrong information. For instance, look at how this question is worded: "There's no way on the Mac to put the time in the Dock. Where else on the screen can I see the time?" It's fairly typical for somebody to make a statement like that as part of their question. Somebody who knows what they are doing will say, "No, wait a minute, you actually can do that." But here ChatGPT takes that as a statement of fact and neglects to tell you that it's wrong. The obvious answer is to simply say, "Oh, you can do what you said you can't do." Instead, it has given me a bunch of other ways to see the time, never telling me that you can actually put the Clock app in the Dock, where its icon shows you the time. When I called ChatGPT out on this, notice that it gave a very human response, reminding us that what large language models do is imitate human responses. They are not fact machines; they are basically response machines. So when I said you can put the time in the Dock by just putting the Clock app icon there, it said, "Correct! Putting the Clock icon in the Dock does show the current time." Then it added some clarifications about what this really means, saying the accurate statement is that macOS does not allow you to place the menu bar clock in the Dock. If you go back to my original question, that is not what I was asking at all. I never even mentioned the menu bar.

Now all those flaws also exist with human tech help. Humans can get things wrong. They sometimes make things up when they don't know. They don't like to answer "no" or "you can't do that," so they will suggest things that maybe don't fit, and they will sometimes agree with your assumption, making it harder to find an answer. It's not like these flaws are new; we've always had these problems getting tech help. But there are some ways that AI help is actually better than human help.

For instance, you get an instant response when you ask a question. If you post something online, it can be hours or days before you get the first response, and maybe longer before you get a correct one. At least with AI, if it gives you the wrong answer and you try it out and it doesn't work, you've only wasted a few minutes instead of waiting until tomorrow to get the wrong answer. Also, even when AI gets it wrong, at least it is usually polite. A lot of times in online forums you find that people are just downright rude. With human tech help there's probably not going to be any improvement in the future, whereas with AI tech help I think a lot of these problems will eventually be addressed, and a higher percentage of our questions will get answered, and answered correctly.

But there is one big problem that looms out there: AI gets its information from what people like me post online. So once AI starts answering all the questions and people just go to AI for the answers, where will all the new material come from? When macOS 28 or 29 comes out, how many new articles about it will be posted online for AI to be trained on, if the people who write such articles can't keep doing it because it's no longer a viable business model? The future of the internet is probably going to be a kind of hybrid. You may go to an encyclopedia or database for an answer to a specific question, but you may get a book on the subject if you want general knowledge about it. Maybe the same thing will happen with tech help. People like me may keep posting how-to videos, lists of tips and advice, and things like that, while AI takes over the direct question-and-answer part. But until AI tech help gets better, keep in mind that sometimes it can be wrong, sometimes it gives an incomplete answer, and sometimes it won't know but won't admit it.

Hope you found this useful. Thanks for watching.

Excellent advice - Gary, don't stop what you are doing. Your help is always helpful.

Thanks a lot, Gary, for this. I have been using ChatGPT, Copilot, etc. for many tech questions, mainly for the speed of answers and for friendly politeness, even if I ask stupid questions. Also it is very nice to know that I am not judged! However, your post here gave me some caution. I have had incomplete answers from them and sometimes have been led along a garden path. Your video is an eye opener. Thanks

I agree that AI can sometimes give the wrong answer — but I’ve had the same experience with real people too, including Apple advisers. You didn’t show the prompts you used. You really need to tell ChatGPT to act like a qualified or senior Apple Advisor, and to think things through carefully (and even double-check) before answering. Also, make sure you tell ChatGPT to focus specifically on Tahoe 26.

Bill: Yes, I point out that people are wrong too. I also show all the prompts.

Thanks bunches

You can really cut down on the mistakes AI will make when asking for technical help by including in the prompt what version of the operating system or app or whatever else you’re inquiring about. The more explicit the prompt, the more likely you are to get a better answer.

Arnie: Same is true when asking a human. 90% of the questions I am asked are lacking all the info I need to give an answer.

Gary, I hate to say this, but you're using the wrong prompts.

Bill: I don’t think I made myself clear here in the video. I obviously don’t need the answers to these questions. I am typing in what I think the general public would be typing in to get answers. This is based on 18 years of being the recipient of these questions.